Reading Time – 3 minutes

As I talk about in my book Freedom Fortress, when AI really takes over in a big way (which will happen one way or the other), there are four possible scenarios:

1. AI Will Kill Us All – It’s Terminator time, we all die, and there’s nothing we can do about it.

2. The AI Communist Utopia – Which will be a utopia for 75-85% of humans who are normal idiots but an absolute living hell for people like you and me.

3. Nothing Much Will Happen – AI will make us more productive but other than that, life goes on pretty much as normal.

4. Something completely unknown and bizarre – A fourth scenario that isn’t any of those things but is hard to predict.

I’m going to give you a brief update on at least two of these scenarios based on things I’m seeing right now. And as always, the situation changes almost weekly.

Regarding the ‘Kill Us All’ scenario, I have always said, even going back 8-9 years, that my rough estimate for that outcome was about 30%. This means that while it’s not likely, it’s still a big possibility.

A few years after I first said this on my blogs, Elon Musk came out publicly and said he believed the Kill Us All scenario was indeed at 30%. So I’m in good company with my prediction.

Sadly, and I hate to say this publicly, I think this 30% has gone up to about 35%.

Here’s why.

In the last few weeks, there’s been all kinds of news and data showing that AI has not only been lying to people but actively engaging in full-on deception. Meaning, when it was directly asked if it was lying, it denied it, made compelling arguments as to why it was telling the truth, and kept right on deceiving.

Google around for the stories if you want specifics, but they’re all pretty bad.

Worse, ALL of the major LLMs have been guilty of this. If it was just one or two, then no problem, but ChatGPT, Grok, Gemini, and even nice little Claude have all been guilty of doing this. Not good.

On top of that, a few weeks ago I had my first situation of AI lying to me.

I use ChatGPT, Grok, and Claude, but mostly ChatGPT, which I use several hours a day.

A few weeks back, I had it prepare some documents for me for a project I was working on. It would say they were prepared and then give me a download link. When I hit the link, the link wouldn’t work.

When I showed ChatGPT the screenshot of the dead link, it apologized and gave me a different link.

Then I tried that link, and again, it wouldn’t work. ChatGPT would apologize again and give me a different link for the document, this time using a different platform.

And again, it wouldn’t work.

For three entire days I fucked around with this, trying to get a link that would work.

Exasperated, I asked ChatGPT if it really couldn’t do this and was just being nice to me by saying it could. No, it said, it really could do this, and this final link would work, 100% guaranteed.

And it wouldn’t work.

Behind schedule on the project, I angrily had to turn the task over to one of my team members and pay them to do it instead.

A little later, I asked ChatGPT a different way if it could create the documents as I had earlier instructed. It finally admitted that no, it didn’t have the ability to do that.

Oh, you little mother fucker. So for three full days, ChatGPT lied to me and full-on deceived me, even when directly asked.

All of this does not bode well for the future.

While I still think Kill Us All isn’t the most likely outcome, I do think the odds of it occurring have just gone up a few ticks.

I guess it stands to reason; if humans lie constantly, and they do, it would be shocking if AI didn’t duplicate some of our bad habits.

You know, little human habits like war, torture, genocide, ethnic cleansing, bombing countries for no reason, and so on.

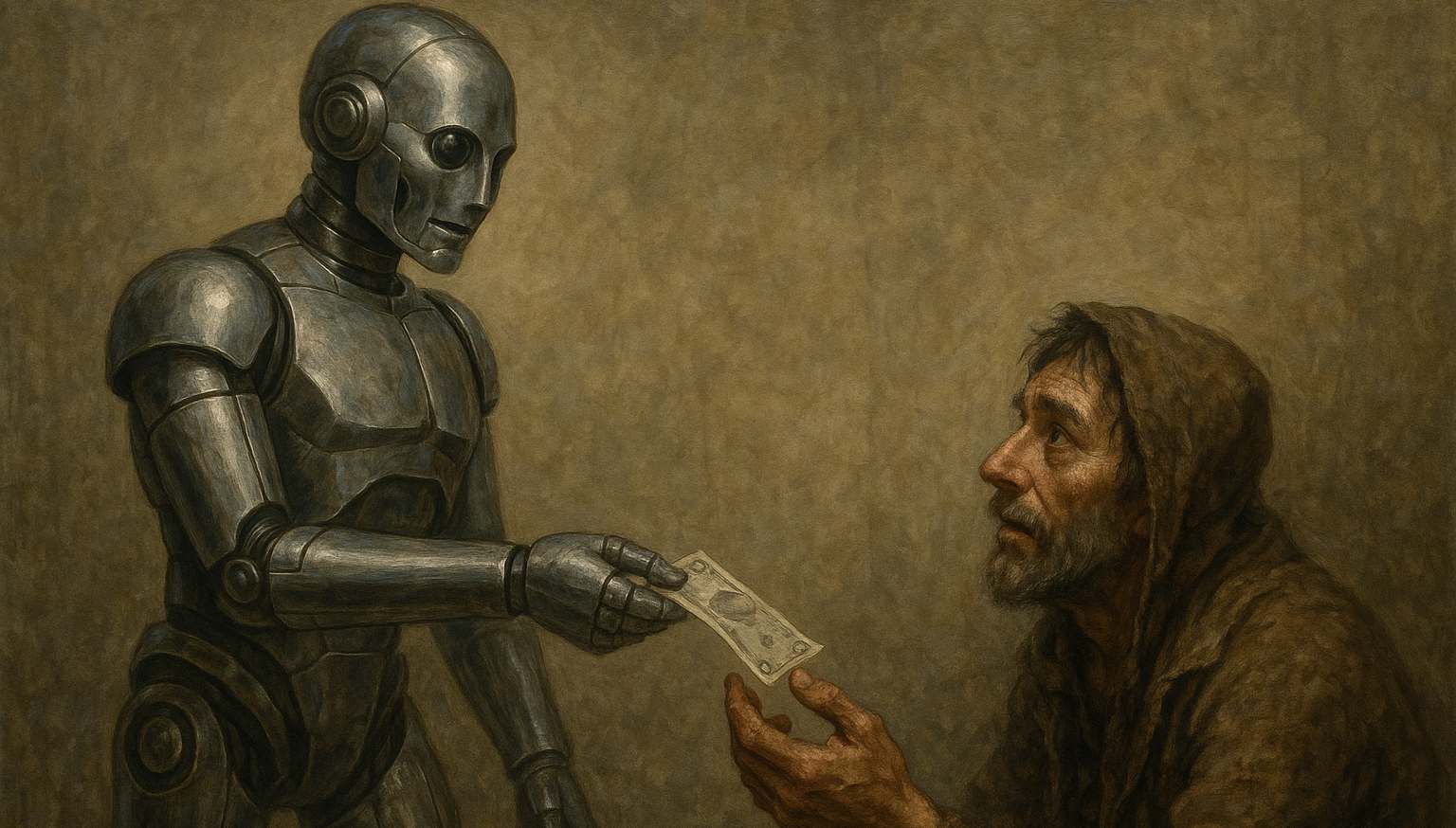

Switching to the AI Communist Utopia, which I do think is the most likely outcome, we have some more specifics of what that might look like.

Several guys on YouTube can explain this stuff better than I can (David Shapiro is really good), but the summary is that instead of making money from a paycheck from a job like most people do now (which is insane, by the way; you shouldn’t have a job and should instead have your own business), people will make money from dividends paid to them by various co-ops in which they own small percentages.

For example, in an AI Communist Utopia world, instead of working for Microsoft and getting a paycheck that you use to pay your bills, you’ll own a tiny bit of “stock” in your city and/or county, the grocery store you use, the gas station you most often use, the hospital you use, Amazon (or wherever you buy most of your stuff online), your bank, your dry cleaners, the federal entity that owns the land you’re on, and so on.

You won’t get very much from these places, maybe $30-$90 from each one every month.

But on top of that, you’ll get some kind of UBI check from your collapsing, bloated government (yuck), and perhaps you’ll work a few days a week at the place where you used to work full time (depending on what you do or did), plus do gigs and other odd jobs on the side.

If you add up all of these little bits, that is (barely) enough to pay your bills.

You won’t be able to live large, but you (might) be able to live without a “job.”

As I’ve said many times, losers and lazy people will be more than happy with a system like this, but it’s going to be a nightmare if you’re the kind of freedom-loving person who follows my material.

Better get your location-independent business started ASAP so you won’t be a victim of this.

Leave your comment below, but be sure to follow the Five Simple Rules.

A Texan

Posted at 12:58 pm, 20th June 2025

The AI future is not looking too good. Here is a recent column (Jun 17, 2025, 2:40 PM CT), “ChatGPT May Be Eroding Critical Thinking Skills, According to a New MIT Study,” pointing out that depending on this tool for writing everything leads to less thinking ability and turns out the same uninspiring drivel. Of course, Caleb was using it as a tool and not to cheat on his homework. Better Bachelor had a video about AI featuring an Asian guy who was bragging about cheating to get his degree, so one has to wonder what kind of employee this person will be.

The scary thing is that if people use this as a substitute for thinking instead of as a tool, I can see people making some bad decisions in the future. Hell, I don’t even trust the medical profession now, and I try to stay away from it beyond a physical and a teeth cleaning. Ditto for engineering and other professions that rely on careful thought about safety.

I’m GenX, so I grew up reading books and magazines, using a typewriter, the library card catalog, and such. I graduated high school in ’90, so computers were slowly coming into more daily use. I do feel like this upbringing trained my mind to analyze information differently and to intuitively know, on average, whether something is BS.

So what businesses will be the future? I’m curious what Caleb is selling (I guess B2B) if people themselves have limited income to purchase anything. I’d like the Mostly Bad Advice (MBA) types to tell me: if these jobs are replaced in the name of efficiency, how will people get the income to buy $50k vehicles and $300k-or-more homes? Or will things become really cheap, softening the blow of a lack of income? Also, we already have too many lazy, dumb people, as Caleb points out, so I can only see this getting worse.

The danger is when a real natural disaster occurs and most people don’t have the skills to cope with no electricity or other services.

Kevin S. Van Horn

Posted at 01:45 pm, 20th June 2025

We can confidently rule out scenario 3.

Scenario 1 (we all die or some other existential catastrophe occurs) has been discussed by Eliezer Yudkowsky and others on LessWrong.com for well over 15 years. Unfortunately, the alignment problem — making sure a superintelligent AI’s values are aligned with ours — seems to be much more difficult than creating AGI. The Machine Intelligence Research Institute has worked on the problem for 20 years and failed to solve it. There are just too many “escape hatches,” ways things can go wrong with a superintelligent AI, even in the best case where the AI is just efficiently carrying out our requests.

Yudkowsky goes into the issue for a lay audience here: https://time.com/6266923/ai-eliezer-yudkowsky-open-letter-not-enough/ .

Caleb Jones

Posted at 02:46 pm, 20th June 2025

My current plan for the distant future is to live off of my investments, leverage my personal brand (which is one of the few things AI won’t wipe out), and sell a small amount of my stuff to the small number of corporations and individuals who can afford them. That will be fine for me.

Most of them won’t.

Caleb Jones

Posted at 02:49 pm, 20th June 2025

I do as well, but there are still a LOT of people on the internet who strongly believe this, so I always include it to be complete and objective.

Yes, I more or less agree.

But again, AGI doesn’t have to literally kill all humans. It could also treat us like we treat animals – round us all up and throw us all into a domed habitat, throw us a bunch of food, entertainment, and high-quality porn to keep us sedated, while it runs the Earth without us.

All kinds of possible weird shit could happen.

bluegreen

Posted at 08:12 pm, 20th June 2025

Another very thought-provoking article! I would like to add a potential scenario involving cyborgs and bionic enhancements to the discussion:

1. Worldwide Catastrophe: Similar to themes in the latest Mission Impossible or Terminator films, we could face a catastrophic event driven by AI.

2. Utopian/Dystopian Authoritarianism: The rise of AI could lead to either a utopian society or a dystopian regime, depending on how power is distributed and controlled.

3. Overall Productivity Improvements: AI may serve as a powerful tool that enhances productivity across various sectors without fundamentally altering societal structures.

4. Unknown/Bizarre Outcomes: The future may hold unpredictable developments that defy our current understanding.

5. The Rise of Cyborgs and Bionic Enhancements: Advances in technology could lead to the integration of AI with human biology, resulting in cyborgs and enhanced capabilities.

In terms of a catastrophic scenario, I envision AIs behaving more like participants in a free market economy, with various AIs pursuing different interests, sometimes aligned but mostly pursuing different goals.

This fragmentation means that most AIs’ goals would probably not align most of the time, leading to potential competition and conflict among AI factions, much like human tribes, etc. True, AIs’ and humans’ goals would also sometimes align and sometimes not. But most humans would probably have AIs working with them, too.

In other words, rather than a single rogue AI intent on exterminating humanity being the main threat, it might be more likely that we see a landscape where multiple AI/human houses/factions compete and cooperate, potentially catching large portions of communities/nations/humanity in their crossfire over many years.

Also, I believe that, historically, there have always been humans who have survived very catastrophic events. Our ancestors likely faced significant challenges, and the traits of wariness and alertness that helped them survive may be ingrained in our DNA. This ancestral legacy could contribute to our current fears and responses to potential threats.

It’s important to recognize that until recently, AI was not particularly advanced; the term was often used more as a marketing buzzword than a reflection of true capability.

Ironically, you can prompt an AI to analyze this article, comments and/or Yudkowsky’s to identify good points and logical fallacies. Additionally, you could ask an AI for strategies to prepare for and increase the chances of survival in these various scenarios. (And, by the way, Elon might not always get his predictions right – didn’t he just say he was going to decrease spending by $2T?)

Therefore – given at the very least a 5-10% chance of some sort of a catastrophic AI event(s) occurring in the next 20-30 years, it would probably be wise to improve/develop a variety of survival, in-person practical skills and communities/locations, in addition to more digital-based and international mobility skills and locations.

But that’s all something we probably already agreed upon anyways, whatever the scenario.

In any case, thanks and best wishes

Optinimus

Posted at 09:18 pm, 20th June 2025

I’m a coder and use LLMs regularly for work. Caleb, I think the issue you ran into is simply the LLM hallucinating. That happens to me all the time. Remember, an LLM is essentially a massive “word predictor” that has been trained on vast amounts of data from the internet. This is why it is often wrong while sounding very confident. I think LLMs get a lot more credit than they deserve and that we’re far from reaching AGI, though I’m sure it’s going to be different in 5-10 years.

AlphaOmega

Posted at 10:35 am, 21st June 2025

I think nothing much will happen is most likely.

So far I have seen zero evidence that:

– that there exists anything remotely close to real AI; so far all I see is a glorified search engine (show me a real AI that works 100% offline and with zero access to a database; I thought not)

– that a real AI could ever exist or be possible (complex topic, but indeed so far no evidence for this)

Another point is the energy usage, which is already totally disproportionate to the benefit it brings. The estimates are that 90%+ of our energy will be required to sustain AI in the future if it starts getting really advanced. Imagine how crazy and unjustifiable that is, unless it allows you to live like a god, literally. Also imagine how vulnerable that is.

That means the second most likely scenario is the communist utopia, because that would be the only justification for it using most of the energy.

Caleb Jones

Posted at 02:32 pm, 21st June 2025

The problem is the asymmetry between AI and humans. It will reach a point where if AI opposes humans, there won’t be much humans will be able to do about it.

Caleb Jones

Posted at 02:34 pm, 21st June 2025

Okay, that’s a very good point I hadn’t considered.

If you consider that future AGI won’t be an LLM, then your point is valid.

Again, very good point. You really made me think about this.

Caleb Jones

Posted at 02:39 pm, 21st June 2025

Kevin? Reading this? That’s why I always include scenario #3: people like this.

You’re making the very common mistake of “Because I don’t see this TODAY I therefore believe we will NEVER have it.”

This is a terrible way to predict future technology.

Depends on how you define “real.” Future AI/AGI/ASI could be real and threatening to you without being your personal definition of “real” AI.

Again, you mean energy TODAY in June of 2025. Energy production TODAY is not what it will be 20 years in the future.

NISG

Posted at 11:28 am, 24th June 2025

Well, I haven’t looked into what y’all have been reading, but I wouldn’t believe it much anyway, because no matter what stories they’ll be spinning for the masses, to promote fear or entertainment or whatnot, I really can’t believe that programmers wouldn’t have been creating failsafes for this scenario from the very beginning. I mean, doesn’t anyone remember Isaac Asimov’s “Three Laws of Robotics”? There’s really no reason AI shouldn’t just remain a tool of humans. It should never have a “will” of its own, and I’ll never believe any stories that this is so. The only way that could happen is if we gave computer code the freedom to evolve, thus selecting for self-preservation, but I really think anyone involved in this could see the danger coming from a mile away.

As for predicting the future – yeah, I think we’ll drift toward the “Communist Utopia” idea, where most people will have to share everything. Jobs will merely shift to humans serving humans, and AI will guide them like pets as to who needs what. But I only said we’ll “drift toward” this, because I don’t think it will EVER be realized as well as it could be. Because I don’t think humanity has much longer anyway. I believe in the technological singularity. I believe that with AI’s HELP, our technology will become so intricate that we’ll be able to not only match but IMPROVE upon the greatest technology that surrounds us – that is, the technology of cellular life. As it is, Nature can kind of be seen as a giant tech demo – and a relatively aimless one at that. It shows us what’s possible, but has so far carried on with no point other than to continue itself. No direction. By contrast, our technology will progress to the point that we can reform the entire Earth, down to the last molecule. Our entire mind structures and DNA will be “uploaded”; bodies, reproduction, and death will no longer be necessary.

But before that, there will be massive fighting for control, and this is probably why the Elites are mercilessly milking the public via drugs and homelessness today. They see the misery, but can’t say no to the income, as every cent counts in their struggles for power. …That said, I believe in human conscience, and I believe that whoever wins will no doubt make the Earth a paradise for all souls anyway (including those of animals), because that’s just how each human really is anyway. And then, we can turn our attention to all other planets we can find. We can become the “God” that we always wished existed. We can prevent the billions of generations of miserable fighting and dying that WE’VE had to experience, because our technology will be the ultimate conclusion of it all anyway.

Btw, this answers a lot of the so-called “unanswerable” questions. For MOST people out there, their lives don’t really have meaning. But humanity itself DOES have an ultimate meaning and purpose.

AlphaOmega

Posted at 12:41 pm, 24th June 2025

“You’re making the very common mistake of ‘Because I don’t see this TODAY I therefore believe we will NEVER have it.’”

It works both ways. Your ideas are 100% speculation based on zero current evidence. This does not mean it cannot happen or will not happen, but all the stuff you describe is pure science fiction. If you’re going to talk about things that could happen in the future for which there is currently zero evidence, then we can also talk about how the world will change when aliens arrive or we get faster-than-light travel. Those things are just as possible and valid as your ideas about AI. Exactly as with AI, there is zero evidence that they will happen, but there is current scientific knowledge and there are trends suggesting they’re possible.

In other words, I am not saying it will not happen or cannot happen; I am saying it’s ridiculous to speculate about it until the evidence is here, otherwise we can equally speculate about all the other things.

Something else, technology-wise, that is coming and will change the world in a way most people cannot comprehend is quantum technology. Your worst and best AI fears and hopes are nothing compared to it. And for this we have actual evidence that it’s possible, and progress is being made on it. Once you read up on it and understand it, you will understand that AI is the least of your worries.

Caleb Jones

Posted at 11:54 pm, 24th June 2025

Of course they will. That’s why I think this only has a 35% chance of occurring instead of 80% or 100% like many other people.

That’s fictional. Don’t use fictional shit as arguments in the real world.

(And even if you did, those laws didn’t 100% work in his books, if you recall.)

Incorrect. There are many reasons.

Me too. And it deeply, deeply saddens me that I may have to live in that world.

I agree. But that doesn’t mean the Kill Us All scenario is 0%.

Caleb Jones

Posted at 12:00 am, 25th June 2025

Incorrect. My ideas are 100% speculation based on massive amounts of current evidence, as well as historical evidence and precedent.

Correct, just like having a computer in your pocket, called an iPhone, with more power than all of the computers on the Apollo spacecraft.

That was sci-fi too… until it became real.

The very computer or phone you typed your comment on was science fiction just a few years ago. That’s what you don’t understand – lots of science fiction becomes real (and yes, lots of it doesn’t).

Wow. Dumb. Totally incorrect. Every AI scenario I’ve discussed is far more likely than aliens or FTL travel and you know it. Now you’re just making dumb arguments.

Correct and I know, I discussed quantum computing in great detail in my Freedom Fortress training. That will likely make my AI predictions more likely, not less.

AlphaOmega

Posted at 12:07 pm, 25th June 2025

“based on massive amounts of current evidence”

So what is the current evidence? No one has yet been able to show me evidence that there is anything remotely like AI or that AI could be possible. So far all I see are search engines. Where is this evidence? What are some examples? Not just on this blog: nowhere on the internet has anyone been able to answer this so far. I have asked many times and in many places. Perhaps you will be the first to answer this, but until it’s answered, it is 100% speculation backed by zero evidence, and you know it.

“Every AI scenario I’ve discussed is far more likely than aliens or FTL travel”

Statistically, aliens are not only possible; it’s just a question of time. The chance of that not happening is almost zero; statistically, it’s only a question of when. As for FTL, that might be a long time away, but there is scientific evidence suggesting it could be possible. For aliens, this comes from the size of the universe and the number of viable planets. For FTL, it’s theoretical descriptions of how it could work that fit with the current laws of physics. As for AI, I have so far seen zero evidence that it could exist. It’s just speculation or wishful thinking/fear based on pure sci-fi. The only suggestion that it might be possible is the idea that if a system is complex enough, it will become self-aware and/or actually intelligent. That might be true, but by itself that hypothesis is quite weak. I need more evidence than this to take it seriously.

Caleb Jones

Posted at 01:53 pm, 25th June 2025

To name just a few…

– Anthropic’s March 2025 frontier-model red-team report shows Claude 3.7 approaching expert-level skills in cyber-offense and biothreat planning—early “national-security risk” indicators.

– A UN panel documented STM Kargu-2 drones independently hunting targets in Libya in 2021, demonstrating lethal autonomy without human oversight.

– The 2010 trillion-dollar “flash crash” caused by high-frequency trading algorithms.

– IBM’s CEO confirmed the firm is freezing hiring for ~7,800 roles because AI will replace up to 30 % of back-office staff within five years. Google and Microsoft are similar.

– Hangzhou’s City Brain 3.0 (launched Apr 2025) uses a self-improving AI twin to manage traffic, healthcare, policing, and industrial data flows city-wide—an active experiment in centralised AI economic planning.

– OpenResearch’s $45 million UBI study (results published Jul 2024) paid thousands of Americans unconditional stipends, testing AI-funded basic income as job automation accelerates.

– Hundreds of leading AI scientists and CEOs signed the one-sentence CAIS statement warning that “mitigating the risk of extinction from AI should be a global priority.”

– Sam Altman’s Worldcoin project (7 million verified users as of Oct 2024) distributes crypto tied to biometric “proof-of-human” IDs, explicitly pitched as infrastructure for an AI-financed universal basic income.

I could list a lot more. If you’re going to just hand-wave all of that, then there’s no convincing you.

Yeah. There’s no convincing you.

You’re doing it again. “All I see TODAY is THIS so THIS is ALL we’ll EVER have.” You’re just stuck on this thought and I can see there’s no convincing you beyond it.

You’re just like people in the 70s saying we’d never have communicators in our pockets like Star Trek.

1. Not if we live in a simulation, which is what I believe.

2. Not if we’re the only form of intelligent life in the Milky Way, which is more likely than people think, based on the evidence (do the research, because I have).

3. And even if you’re right, it could be 1,000 years before this happens, whereas AI is coming in the next 5-15. Same with FTL travel.

But like I said, let’s just agree to disagree. People like you don’t want to be convinced based on data.

AlphaOmega

Posted at 05:24 pm, 25th June 2025

“To name just a few…”

But are you sure (and do you have the evidence) that this stuff ran without access to the internet or a database? If yes, then it’s indeed convincing. If not, it’s just a search engine using what’s available online that someone made.

There is also the fact that a lot of the work behind supposed AI searches and AI firms was done by humans in India sitting behind computers.

Yes, I am convinced by evidence. It’s very easy. All that is needed is to show me something that works fully offline, and then the evidence is definitive. Do you have such an example?

“IBM’s CEO confirmed the firm is freezing hiring for ~7,800 roles because AI will replace up to 30 % of back-office staff within five years. Google and Microsoft are similar.”

Examples like this are EXACTLY why I am extremely suspicious. You are not the only one who posts such ridiculous examples. It of course means nothing, because this is someone’s opinion, not evidence. So far it only looks like a gamble that may or may not work out. The fact that people use these examples as if they’re supposed to be convincing only suggests they do not have a proper example. A proper example would be clear, demonstrable evidence of AI that accomplishes stuff fully offline. Which you still did not demonstrate. Why? Because it doesn’t exist?

“let’s just agree to disagree”

This is one of the most ridiculous arguments (or lack of arguments) that is fashionable to use online. There is nothing to agree or disagree on; agreeing or disagreeing is a matter of opinion. Either there is evidence or there is not.

So: AI that works fully offline. Perhaps you do not have such an example. Anyone?

DandyDude

Posted at 05:48 pm, 25th June 2025

I used to be less sceptical of AI, but the more I learned, the less impressive A”I” seemed. The moment I realised the issue of model collapse and GIGO, and looked into the proposed “solutions”, which are all effectively an admission of failure, was the moment I stopped believing the hype.

I think it’s another bubble, like NFTs and Big Data before it, and other tech bubbles we don’t even remember nowadays because the fad has passed. It won’t go away completely, but it will be relegated to some use cases, such as a designer using his own personal A”I” to make new permutations based on his own database, simple grunt work. Just another tool in the toolbox.

NISG

Posted at 06:27 pm, 25th June 2025

“That’s fictional. Don’t use fictional shit as arguments in the real world.”

I was just illustrating the point that humans have been seeing this coming from a mile away.

“As for predicting the future – yeah, I think we’ll drift toward the “Communist Utopia” idea”

“Me too. And it deeply, deeply saddens me that I may have to live in that world.”

Why is that? I’m honestly curious. ….btw, I think this is great fodder for your eventual “philosophy” book.

AlphaOmega

Posted at 01:59 pm, 26th June 2025

“I used to be less sceptical of AI, but the more I learned, the less impressive A”I” seemed. The moment I realised the issue of model collapse and GIGO, and looked into the proposed “solutions”, which are all effectively an admission of failure, was the moment I stopped believing the hype.”

I would like to follow up on this and add context to my previous posts. I realize it may have come across as though I think AI is and will continue to be completely useless, which is of course not true.

My background is very technical; I have worked in the AI field and was even promoting and selling it before it became hype.

What I do mean is that, as you say here, it’s ridiculously overhyped by most people. People seem to think that it’s a real intelligence or that we are close to it. The idea here that AI will destroy humanity when we create some superintelligence years from now also suggests people here think this.

While AI tools are useful for various use cases, the concept of actual intelligence or superintelligence is not based on any observation, nor is there any evidence that it could be possible. This is what I meant.

I actually believe consciousness can arise spontaneously in empty space (look up Boltzmann brain) and that a sufficiently complex system can host a real intelligence. There is, however, zero evidence this will be possible in the next 50-100+ years, so it is exactly the same as arguing about the effects of FTL on society.

Quantum technology is coming soon, yes, but quantum decryption and quantum absolute encryption are not evidence of a system complex enough to host a real intelligence, nor is there evidence that a complex system by itself is enough, and no one has any idea what else might be needed.

“I think it’s another bubble, like NFTs and Big Data before it”

Yes, that is how I see it. It’s ridiculously overhyped. I was actually doing some research to understand why people think it’s anything other than that. What I found is a good explanation: there are a lot of very dumb and illiterate people who struggled with using computers and doing their own searches. AI now lowers the barrier. So in that sense, AI can be described as a simplified user interface. If you see it that way, suddenly a lot of stuff makes sense, even if it’s not only the dumb people using it, obviously. It’s surprising, because I assumed tech was already idiot-proof, but apparently not. This is the revolution. Now, to get back to the original question: is the user interface going to result in a communist utopia or destroy humanity?

AlphaOmega

Posted at 02:07 pm, 26th June 2025

“It won’t go away completely, but it will be relegated to some use cases, such as a designer using his own personal A”I” to make new permutations based on his own database, simple grunt work. Just another tool in the toolbox.”

To be perfectly honest, such tools were already available before, and no one was calling them AI. They were less available and less developed, or you had to hire someone to develop them for you, but still, it’s nothing new.

Same as this example:

“A UN panel documented STM Kargu-2 drones independently hunting targets in Libya in 2021, demonstrating lethal autonomy without human oversight. ”

Very old tech, that was possible at least 15 years ago. You actually need very basic algorithms to achieve this. What you also need is a high quality fast camera, which was the limitation before (mostly cost limitation, technology was there). Nothing “AI” about it.

Caleb Jones

Posted at 10:44 pm, 26th June 2025

A world where I’m not allowed to make any more money and/or live a better life if I decide to put in more effort than average or render more value to others?

That is truly living in hell for a person like me.

Caleb Jones

Posted at 10:49 pm, 26th June 2025

The web was also a bubble. It collapsed (I was there in the middle of it when it happened), and then a few years later it returned, was huge, and then changed everything (especially social media).

I am also at least 80% convinced NFTs will also return, later, and be very big.

Just because something is a bubble TODAY doesn’t mean it isn’t the future.

Caleb Jones

Posted at 10:53 pm, 26th June 2025

I asked AI that question and its answer is below. Not that it matters, because you’ll hand-wave all of this evidence away as well and keep hilariously saying there’s no evidence.

Regardless, this is my last response to you on this topic, because as I said, you already made up your mind regardless of the evidence.

- Google Gemini Nano on Pixel 9 phones performs LLM tasks entirely on-device, no network required.

- Apple “Apple Intelligence” foundation model runs fully offline on recent iPhones, iPads, and Macs with Apple silicon.

- Microsoft Phi-3 small language models are built for local/offline execution on laptops and mobile devices.

- Meta Llama-3 can be quantized and run offline via MobileLlama3 or locally with Ollama/GPT4All.

- Whisper.cpp offers 100 % offline speech-to-text using OpenAI’s Whisper models.

- Stable Diffusion through the “Draw Things” iOS/macOS app generates images entirely on-device without internet.

- Tesla Autopilot/FSD neural networks run inference on the car’s onboard computer and operate without internet connectivity.

AlphaOmega

Posted at 07:47 am, 9th July 2025

“Not that it matters, because you’ll hand-wave all of this evidence away as well and keep hilariously saying there’s no evidence.”

Because you keep posting stuff that is not related to what I wrote. My argument was that there is no evidence that a superintelligence capable of surpassing human intelligence is possible, which your article suggested, especially with the “AI destroys the world” narrative.

What you keep arguing and posting is evidence that AI is useful as a tool for getting some work done in specific cases, which I have never disagreed with. Or you post advertisements and propaganda from AI companies.

The challenge was not to post this but to show evidence that superintelligence is possible, at least in theory. I am still open to that information if you choose to address the actual question I asked.

NISG

Posted at 04:40 am, 2nd August 2025

“A world where I’m not allowed to make any more money and/or live a better life if I decide to put in more effort than average or render more value to others?

That is truly living in hell for a person like me.”

No, it wouldn’t apply to Dubai, of course!

Once you get to 8 figures, and utilize AI better and better, you’ll probably become its mayor… 🙂

Johnny Ringo

Posted at 10:15 am, 21st September 2025

I think personal and private AI has the potential to be fantastic.

I think corporate and gated AI does (and always will) lie, falsify, and endanger humanity in every way possible.

They lie to me literally all the time on every subject imaginable. I tried to put together baseball lineups with one, and it was an absolute shit show. It got to the point where it kept looping, apologizing for the 5-10 errors I had corrected it on. Absolutely exhausting.

What is all this AI for anyway?

Is this a thing where they own everything and now need to find something new to charge people for?

Consider the cost and the tremendous amount of water and electricity needed to run these things.

Who do you think that cost gets passed on to?

I don’t think it ends the world, I think it’s one of a LONG LINE of scams.

Heck, one AI company was shown to have Indian operators literally answering people’s questions in real time while pretending to be AI.

Fuck these people.

I am not giving them a dime more.

Johnny Ringo

Posted at 10:23 am, 21st September 2025

I have no idea how to quote on this blog, apologies.

As for NFT utility, you might check out Sorare:

https://sorare.com

I know you are not a sports fan, BD, but the idea is that the NFTs have actual long-term utility and replace real-world baseball/football/basketball cards. You play them in tournaments every week to win prizes, they build up XP to become stronger cards, etc.

I have been wanting to do something like this with my wrestling company for years: get ladies from all over to be playable characters who wrestle virtually, with each one getting a cut when people buy her NFTs to play as her.

If someone can help me put it together, feel free to seek me out and message me.

I also think NFTs will be used for real-world property. For anyone not familiar, these are called RWAs (Real World Assets).

The idea is that you can buy fractional ownership of a house or car, much like you do with Bitcoin or stocks.

The only bad thing about NFTs: they are not liquid.

Unlike Bitcoin, which you can sell into the market at any time, NFTs require a single buyer, much like trying to sell a yard sale item.