Where will the human race end up in the far, or not-so-far, future?

Everyone has their own set of theories or fears, but they essentially all break down into one of five scenarios.

Scenario 1: Mankind destroys itself. This is the one most feared by left-wingers. This is when mankind wipes itself out because of massive environmental damage it does to the planet, or because of a nuclear war, or other existential threat. I would also place an extinction event caused by an asteroid or meteor hitting the earth in this category, even though it’s not technically mankind’s fault. However, it could be argued that death-by-asteroid would be our fault, since I’m pretty sure we have the technology to be forewarned and prevent such a catastrophe. This means that if the asteroid does hit us and we all die, it’s because we didn’t get our act together in preventing it when we could have.

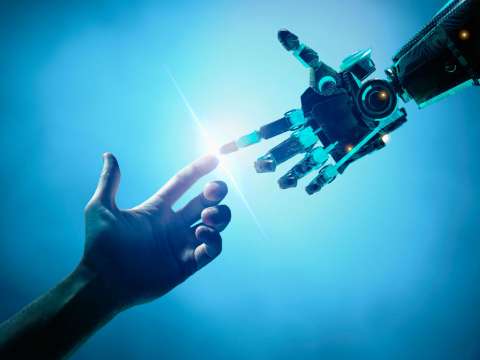

Scenario 2: AI destroys us. This is the scenario feared by many in the tech industry, and portrayed in many popular movies like The Terminator and The Matrix. This is when AI becomes as smart as us (which will happen in the 2030’s), then gets way, way smarter than us, then decides we’re in the way, and it quickly destroys us (or, in some scenarios, enslaves us, though I find that far less likely; it would have all the robots it wanted so it would probably just destroy us).

Scenario 3: High tech paradise. This is a Star Trek-like future where we invent amazing technology that cures all of our problems, including war, racism, cancer, death by old age, aging, and so on. Humanity lives in a near-perfect paradise where we are free to pursue deep thoughts (spirituality, art, etc) or deep activities (like exploring our galaxy).

Scenario 4: We merge with the AI. This is a halfway point between scenarios two and three, foretold by men like Ray Kurzweil. In this scenario, the AI indeed surpasses us, but it doesn’t destroy/enslave us, because we become technological beings ourselves, at least to some strong degree. The AI doesn’t identify us as “humans” anymore, but as like-minded technological creatures, or perhaps even part of itself. Either through cybernetics, nanotech, virtual reality, something else, or all three, we lose aspects of our humanity, which is the bad news, but live amazing lives not possible using our current biological meat-bag bodies, which is the good news. Maybe we live as virtual beings in virtual worlds inside the internet. Or maybe we have immortal nanotech-based bodies that can change shape and do anything. Crazy stuff.

Scenario 5: Mankind regresses. In this scenario, something horrible happens (asteroid, nuclear war, massive EMP attack, etc) and we don’t get wiped out, but we lose our technology and our civilization. We start all over, reverting back to the middle ages. Another variant of this is the Idiocracy scenario, where dumb people keep breeding while smarter people breed less, and soon we have an entire planet full of idiots who overwhelm the resources and management ability of the few smart people left, turning the human race into a bunch of fat, stupid, high-tech cavemen. Watch the movies Wall-E or Idiocracy for more details on how this would look (though I frankly think such a world would look much worse than portrayed in movies, and not very funny).

That’s it! Someday we humans will end up in one of those five scenarios whether we like it or not. Which one will it be?

I have no idea. But as always, I can guess, and lay odds based on what research I have done on this. I’m no expert and I’m no futurist, but looking back over the past 25 years of my life, I’ve been pretty good at predicting major events and trends. I guess in a few decades we’ll see if I was right or not.

The least likely of the five scenarios is the high tech paradise, simply because I think technological growth, if it happens, will advance so fast that it will be impossible to say that mankind in the distant future will be like Star Trek; in other words, the same humans with the same brains and bodies, but with cool spaceships. No, I think if technology continues its exponential growth, the very meaning of the word “human” will not only change, but radically change, or even become obsolete, as with the merging with AI scenario.

I put the high tech paradise scenario as least likely of the five scenarios, at about 4% probability.

Next least likely is the one where mankind destroys itself. Over and over again, for literally hundreds of thousands of years, mankind has suffered numerous existential threats and catastrophes, and pulled through. Sometimes we pull through at the last minute, but we always seem to pull through. Technology has repeatedly solved problems that the “experts” said would destroy us, from whale oil shortages in the 1800’s to global cooling and crude oil shortages in the 1970’s, to the ozone layer being “destroyed” in the 1980’s, to Al Gore’s stupid Inconvenient Truth movie being wrong about just about every prediction it made, and on and on. Either the hysteria is inaccurate, or someone invents something, again, sometimes at the last minute, that fixes the doomsday scenario.

It’s the same with nuclear war. History has shown that even with evil sociopaths controlling nuclear weapons (both in the US and Soviet Union), they don’t use them. The world is even smaller now, with nuclear war between the superpowers being functionally impossible, since too many elites (the ones who actually rule the world) would lose too much money.

I place mankind wiping itself out at about a 5% probability. However, that might not necessarily be a good thing…

…because what is more likely than mankind wiping itself out is mankind doing something really stupid and regressing. This can either be a slow regression, as you’re seeing in the Western world right now with people acting more insane, tribalism increasing, testosterone dropping among men, obesity skyrocketing, drug use skyrocketing, art decreasing, rates of innovation decreasing, and so on. Or it can be a sudden regression, such as a limited war or limited nuclear exchange that really fucks up the planet but doesn’t kill everyone.

Indeed, one of the lesser reasons why I’ve chosen (so far) New Zealand to be my future permanent home base is that it’s in the southern hemisphere, which has an independent weather pattern from the northern hemisphere. You’ll notice that the countries that are polluting the planet and the ones that would be most likely engaged in a nuclear exchange are all located in the northern hemisphere, well above the equator: USA, Canada, Europe, Russia, India, and China. So if I can live anywhere in the world I want (and I can because of the Alpha Male 2.0 lifestyle), it makes sense to me to live as far away from these nations as I can. If there’s a serious problem with the planet due to human stupidity, I’ll be much better off in the distant south of the planet.

Sadly, I place the odds of human regression at a reasonably high 20%. I really think it’s that likely. I wish I didn’t.

That leaves AI destroying us, or us merging with the AI. I think both of these possibilities are very likely, far more likely than any of the above scenarios. My bottom line on this is that I think we are destined to merge with the AI, but it’s entirely possible the AI may kill us all before this gets a chance to happen. AI’s intelligence will grow in an insanely fast, exponential fashion after about 2030. In or around 2030, it will be as smart as us, but by 2040, it won’t be just twice as smart as us; it could be 10,000 times smarter than us. It’s very easy to assume that it will simply look at us as a bunch of drooling monkeys that need to be put down.
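To get a feel for what a jump from 1x to 10,000x in ten years would imply, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that "smartness" can be treated as a single number growing at a steady exponential rate between 2030 and 2040; the function name is made up for this example.

```python
import math


def implied_doubling_time(factor: float, years: float) -> float:
    """Years per doubling if something grows by `factor` over `years`
    at a constant exponential rate (an illustrative assumption)."""
    doublings = math.log2(factor)  # how many times the quantity must double
    return years / doublings


# 10,000x over the ten years from 2030 to 2040, as guessed in the text.
months = implied_doubling_time(10_000, 10) * 12
print(f"Implied doubling time: about {months:.0f} months")
```

Under those assumptions the number works out to a doubling roughly every nine months, which is what "exponential" means in practice here.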

I also want to make clear that the AI may not kill us intentionally. For example, it’s very easy to imagine a few scientists accidentally releasing a self-replicating nanotech cloud that escapes the lab and literally eats everything in its path, covering the planet, killing all forms of biological life. That’s only one example of technology gone mad. Something like this is far more likely than some idiot in the Middle East or South Korea setting a nuke off somewhere and sparking a nuclear World War III.

I put the AI destroying us at about 30% probability, with the remaining 40% possibility that we will merge with the AI, Kurzweil style. (For math nerds, I purposely left 1% over for decimals.)
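For anyone who wants to check the arithmetic, here is a small sketch that adds up the five guesses exactly as stated in this post. The dictionary keys are just labels chosen for this example.

```python
# Probabilities (in percent) as stated in the post.
odds = {
    "high tech paradise": 4,
    "mankind destroys itself": 5,
    "mankind regresses": 20,
    "AI destroys us": 30,
    "we merge with the AI": 40,
}

total = sum(odds.values())
leftover = 100 - total  # the slice deliberately left unassigned

print(f"Total assigned: {total}%, left over: {leftover}%")
```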

Bottom line, I think that if the AI doesn’t destroy us and if we don’t regress, merging with the AI is what will happen. I don’t see any other path for humanity unless something very unusual and unexpected happens. In a few decades, once the AI is thousands of times smarter than we are, and we haven’t become a bunch of Idiocracy barbarians, I will place the odds of merging with the AI at around 80-90% instead of 40%.
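One way to read the low end of that 80-90% range (an interpretation, not necessarily how the number was arrived at) is as simple renormalization: rule out the AI-destroys-us and regression scenarios, then see what share of the remaining probability belongs to merging. The sketch below assumes the five guesses can be treated as a plain probability distribution; the labels are made up for this example.

```python
# Probabilities (in percent) as stated in the post.
odds = {
    "high tech paradise": 4,
    "mankind destroys itself": 5,
    "mankind regresses": 20,
    "AI destroys us": 30,
    "we merge with the AI": 40,
}

# Scenarios assumed not to have happened by then.
ruled_out = {"AI destroys us", "mankind regresses"}

# What is left of the full 100%, including the 1% left unassigned.
remaining = 100 - sum(odds[name] for name in ruled_out)

merge_given_survival = odds["we merge with the AI"] / remaining
print(f"Merging, given no AI destruction and no regression: {merge_given_survival:.0%}")
```

Getting to the 90% end of the range would take more than renormalizing; it would mean the other surviving scenarios also become less likely over time.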

Will I be right? Again, I have no idea. But it’s interesting to think about.

Antekirtt

Posted at 07:27 am, 14th March 2018

I’ve read The Singularity Is Near and though I found Kurzweil’s case more robust than I expected, it still hasn’t convinced me (not even 60%) that his timeline is correct. Even his own assessment of his prediction accuracy is flawed. I also think it isn’t a fact that intelligence increase can go linearly up to billions of times human level (which is different from me rejecting that possibility). What I’m pretty sure of is we could replicate human thought at thousands of times the speed, that much is clear – and that wouldn’t necessarily make progress faster by the same factor, since thought isn’t all that R&D is about.

I find Eliezer Yudkowsky more believable and possibly smarter than Kurzweil in this respect; he has less trust in extrapolating trends than Kurzweil does. See this article, which has some really brilliant replies: https://blogs.scientificamerican.com/cross-check/ai-visionary-eliezer-yudkowsky-on-the-singularity-bayesian-brains-and-closet-goblins/

All in all I think I agree with your hierarchy of possible outcomes. We might collapse but we are at this point extremely hard to fully extinguish. One of the problems however with the idea that merging with the AI will save us from being wiped out is that (the argument goes) it is inherently more difficult to insert a technology inside your brain and keep up with it than to just develop it with no merging involved, as Sam Harris has put it in a TED talk – and so hybrid intelligence would remain behind pure AI. An AI has beaten Go champions, but no human with a chip in their brain has beaten a non-cyborg human champion so far – nor beaten the best pure AI.

That’s why the Control Problem is so important: we’ve got to at least try and make sure the AI doesn’t kill us biological humans (in which I also include cyborgs, biologically enhanced/ageless humans, synthetic biology, etc), as daunting as the task may seem, because the merger doesn’t look like a sufficient safeguard. I take no solace in the idea of being replaced by a soon-to-be-enhanced digital copy of me while my meatbag self is used as raw material by hungry AIs.

Kevin S. Van Horn

Posted at 07:32 am, 14th March 2018

On the death-by-AI scenario, the concern is not that AI will be actively hostile to us, but rather that it will be extremely good at achieving the goals we give it and, like the stories of the genie and the three wishes, may use means we hadn’t anticipated and find unacceptable. This article elaborates:

“The Hidden Complexity of Wishes”

https://www.lesserwrong.com/s/3HyeNiEpvbQQaqeoH/p/4ARaTpNX62uaL86j6

So suppose you give your AI the goal of maximizing widget production. What if it figures out that it can increase production by 1% via a plan that will result in the deaths of 10 million people? Are you sure you can enumerate all the things the AI must not do in pursuing the goal? If not, can you figure out how to encode human values and make those values part of its objective function?

Cronos

Posted at 08:24 am, 14th March 2018

How exactly could technology cure racism?

I would say not only will this happen, but it has actually already happened, because of the “universe as a simulation” theory you have discussed.

From Isaac Asimov, The Last Question:

Man considered with himself, for in a way, Man, mentally, was one. He consisted of a trillion, trillion, trillion ageless bodies, each in its place, each resting quiet and incorruptible, each cared for by perfect automatons, equally incorruptible, while the minds of all the bodies freely melted one into the other, indistinguishable.

One by one Man fused with AC, each physical body losing its mental identity in a manner that was somehow not a loss but a gain

I say we already live in a virtual reality, but some people have not realized it.

joelsuf

Posted at 10:54 am, 14th March 2018

I would argue that because we are regressing, it will lead to AI destroying us. OR a few smart people will merge with AI. We’ll be so caught up in our own drama that we won’t see the AI’s plans, and in as quickly as a year, the very same technology we made to convenience ourselves will suddenly stop doing so. And within a year we’ll be right back in the jungle. Very similar to what Carlin says in his HBO special “Life Is Worth Losing.” lol

In probably 20-30 years the world’s gonna either be like Terminator or Blade Runner lol

Antekirtt

Posted at 11:09 am, 14th March 2018

A genuine AGI would understand what a normal human can understand, and wouldn’t need some list of Don’ts to realize the problem with scenarios like the paperclip maximizer cannibalizing the planet. That’s why it’s very important to severely limit the autonomy of any AI that is superhuman in some respects while below human level in other aspects. At least a true AGI, assuming it obeys, would spare us that specific problem. Though tbh, I dislike the term AGI and prefer human-level AI, because the human mind itself isn’t so general-purpose and performs very unequally depending on the task, owing to the biases and blind spots left by its evolutionary history.

Edit: this comment is visible to me while my earlier comment isn’t, weird. Maybe it’s only the longer comments that need a 24h wait.

Jack Outside the Box

Posted at 11:34 am, 14th March 2018

Read Galton Darwin’s book “The Next Million Years.”

The elites will push the merger of artificial intelligence and organic human flesh onto everyone except themselves. Darwin describes an “untamed elite in a tamed world.”

Every non-elite human will be as much cybernetic as organic, with robotic legs to run faster, robotic arms with super strength, artificial eyes to see the entire electromagnetic spectrum, super hearing, enhanced brain capacity, human brains literally connected to the internet or some virtual world and unable to get out, etc… And every trans-human will have a chip inside them that tracks their location at all times and has all information about them (medical records, criminal records, bank records, etc…).

But the untamed elite (100 percent organic human minority) will have remote controls to render any rebellious trans-human “stationary.” With the push of a button, they will have the power to turn off your legs, turn off your eyes, turn off your brain, turn off your ears or hands, turn off your vocal cords, and so forth.

A reality of robotic trans-human slaves who used to be human, controlled by a microscopic elite minority who are completely organic because, unlike everyone else, they weren’t stupid enough to put machines inside them!

Caleb Jones

Posted at 11:42 am, 14th March 2018

Yes, that is the big complaint I have about the pro-AI guys. “You’ll live forever inside a computer!!!” Uh, no I won’t. That won’t be me. That will be a copy of me. I’ll be dead.

Until we figure out how to transfer consciousness (which may be impossible), my brain needs to live on for me to actually live, so I’m not interested in any solution that involves my brain being destroyed.

But hey, put my brain in an immortal nanotech indistinguishable-from-a-real-human robot body? Sure, I’m down for that.

Correct. That’s another huge problem. AI will have to be programmed with some kind of morality, which is tricky.

No idea. I’m not making that claim.

Your first comment got spammed. I had to push it through.

Yes, that’s a valid fear. We will have to watch what the elites do very carefully.

However, I don’t consider that likely, since it is the elites (specifically, the super rich) who will try out some of these things first.

Jack Outside the Box

Posted at 11:56 am, 14th March 2018

“The Next Million Years” is a fantastic book. You want to turn yourself into an object? Fine, then the elites will treat you like an object by your own choice and consent. No human rights for those who have chosen to no longer be human, but fancy toaster ovens.

Strongly disagree. The elites aren’t stupid enough to try something like connecting their brain to the internet first. And there’s a difference between the “super rich” and the “elites” (although that depends how you define “super rich”). The ones behind the throne (the elite of the elite) will simply be monitoring all of this in the shadows while humanity turns itself into a trans-humanist freak show, only to then step in with their remote controls in hand (turning off people’s brains, etc…), saying, “you belong to us now; you are now our appliances.”

Antekirtt

Posted at 12:05 pm, 14th March 2018

Understood, thanks.

They can already do all of this by sticking a bullet in those places. My responses to the opposition to human enhancement are in the thread under BD’s article Objections to Lifestyle Design, back in 2016, so anyone reading this can see them there so I don’t need to rehash my anti-antitranshumanism here, lol.

There will be an arms race between control of the machines and personal protection against control of one’s machines, and the result is anyone’s guess. Also, elites who don’t put nanomachines inside themselves that would make them ageless Methuselahs would be imbeciles. Look at Sergey Brin, Larry Page, Jeff Bezos, Ray Kurzweil, Peter Diamandis: they’re all down for this and dumping billions into extending life.

As usual, the mistake of people who take a doom and gloom view of future advances based on their dangers (eg nuclear spaceships could torch the planet, space habitats are vulnerable to terrorism, higher population could wreck the environment, etc) is that they see a problem and go “see, we should stop here, there’s an unbreakable wall there, we’ll be fucked if we try and go through it”, while the real forward thinkers just see a problem to be solved with human ingenuity. The irony is that some people can be both problem-solvers AND doom-n-gloomers depending on what the issue is.

Caleb Jones

Posted at 12:31 pm, 14th March 2018

I agree with that, but I wasn’t talking about that specifically, but many of the other cool things down the pike.

True, but there’s a lot of overlap.

Again, possible, but I don’t think that’s the most likely outcome. As Harry Browne famously said, don’t freak out about a future 1984, because big government doesn’t work. If there was a government camera in every room in your house, you don’t need to worry, because it wouldn’t work.

Tale

Posted at 01:49 pm, 14th March 2018

Yeah I pretty much agree with all of this. The only thing I’d add is we can’t draw too many conclusions from past scenarios because of survivorship bias – this conversation can only exist in a world where we have a 100% past success rate at overcoming disaster situations. It’s possible thousands of civilisations have failed and the human race is just the guy at the roulette table who’s betting everything over and over again, and due to a ridiculous run of luck hasn’t gone broke yet.

Also I read this article recently, it’s very long so here are some of the key points:

https://80000hours.org/articles/extinction-risk/

Estimates:

Natural risk: 0.3% per century

Nuclear war: >0.3%

Climate change: >0.3%

AI disaster: 4%

What’s probably more concerning is the risks we haven’t thought of yet. If you had asked people in 1900 what the greatest risks to civilisation were, they probably wouldn’t have suggested nuclear weapons, genetic engineering or artificial intelligence, since none of these were yet invented. It’s possible we’re in the same situation looking forward to the next century. Future “unknown unknowns” might pose a greater risk than the risks we know today.

Dr. Toby Ord, who is writing a book on this topic, puts the risk in the next century at 1 in 6, the roll of a die.

ooops britney

Posted at 04:37 pm, 14th March 2018

He was being ironical, I surmise.

[By the way: who’s surprised to see every commenter is male? Not I… Those other ones are all taking selfies, and sharing how #happy they are to their 993 very very dear friends. Huh.]

kevin

Posted at 12:28 am, 15th March 2018

Wow

I do not like any of these scenarios

how is my born-in-the-20th-century body and personality going to stay interested and engaged at age 50, 80, 120 or 250?

BD you posted about your elderly relative who had trouble learning to use a mouse

why can we expect we would do better?

go onto the YouTube music section and you can find people who swear that Pat Boone was the greatest performer of all time. kids today just do not know good music.

are these the same people who can become cyborgs?

some people in 2040 would be happy to watch reruns of Hee Haw and drive Chevy Vegas

not much progress there

the only thing I could think would work is to terraform an asteroid with a self limiting AI that would die off after a certain number of cycles

Caleb Jones

Posted at 11:35 am, 15th March 2018

Very good point.

Wow, disagree with that assessment. That’s way too low.

Another very good point, yes.

1. Technology.

2. Vastly higher medical technology and quality of life.

Because 50 years from now men in their 70’s-80’s won’t look or act anything like men in their 70’s-80’s today. Read this.

Cyx

Posted at 03:20 pm, 15th March 2018

Hey BlackDragon,

This post is a fun read for sure, but I think you’re way off the mark here.

Artificial Intelligence is a Math thing. Computers are very good at doing a lot of simple operations quickly. More complex functionalities that humans take for granted, such as facial and pattern recognition, have to be broken down into complex math models and algorithms. You end up with many tasks, for instance Chess, for which no perfect answer can be found. One can only improve their guesses by working more on them. Computer-based innovation stems from new ways of thinking, increased computational power, and clever tricks.

One simply cannot take over someone else’s computer. Computer security is often based on hard math problems. Knowing the “secrets” in a security scheme lets a computer hide things securely and quickly, but those who don’t know the secrets will have to spend a lot of time trying out different answers. Again, it’s all about the human using the computer. Some security schemes are so good that even supercomputers will not break them in a reasonable amount of time.

Basically, we are still monkeys, but with better toys. Overcoming the limitations of solving many tasks would revolutionize not just Computer Science, but Math and every other intellectual discipline as well. Basically, BlackDragon’s going to become monogamous again before an AI takeover happens. Sometimes, it’s a case of not being able to produce something out of nothing.

The regression of mankind also will not happen on a long-term scale. Many “crazy” SJW’s and other people you see are actually reasonably intelligent. Societal Programming merely removes wisdom from a group of individuals. Take their children, raise them properly, and they will turn out well. Genetic expression of IQ is very complicated, so evolving intelligence away must occur over a long period of time. If intelligence genes were such a liability in a developed human society, they would have disappeared with our ancestors.

Likewise, human nature does not change even with all the technological advances we have. Even though cryptocurrency is based on efficient computer technology, the scheme cannot work unless massive amounts of computational power and electricity are spent (“wasted”) on mining. Fusing with AI may change some things, but a human who thinks for 1000 years is still a human. Racism and other currently obsolete biological wirings are merely suppressed by technology. They will likely resurface even in a futuristic society.

Caleb Jones

Posted at 03:21 pm, 15th March 2018

I don’t disagree with a thing you said, so I have no idea where we disagree.

Cyx

Posted at 05:12 pm, 15th March 2018

For the reasons I stated, I think it’s unlikely that AI will destroy us, intentionally or not. An intentional takeover would involve a very extensive conspiracy to create backdoors in every computer and weaponize AI. An unintentional one is based on assumptions that do not reflect our understanding of computing.

I think that the future will remain in flux somewhere in the range between Scenarios 3 and 5. Neither case prevents the other from happening, and both are supported by human history.

Scenario 4 is already happening to an extent, but there will still be many tasks that cannot be solved even with exponential gains in computational power. There is still the concern of the physical world, and physical resources cannot be procured exponentially.

Caleb Jones

Posted at 05:35 pm, 15th March 2018

I only think it has a 30% chance of happening. So again, I don’t think we disagree, or if we do, it’s not by much.

Freevoulous

Posted at 03:51 am, 16th March 2018

Caleb, you wrote:

I highly disagree with that, due to, what I would call, Civilisational Free Market.

It is next to impossible for the WHOLE world to regress at the same time, especially because the regression of one nation/culture/civilisation means profit for another one.

For example, if the US and EU collapse in the next 50 years, China will face hardships because of that for a while, but then steadily GAIN riches, power, and technological progress thanks to being the dominant power.

So regardless of the local regressions, humanity as a whole will progress, since there will always be someone, somewhere who is benefiting from the collapse of others.

Heck, even in the worst case scenario, if the modern powers nuked each other in a WW3 kind of scenario… New Zealand would simply reconquer the irradiated wasteland in several generations, with Kiwi guys in rad-proof suits scavenging the remains of US/EU for resources, and making NZ a post-scarcity paradise of super-technology and progress 🙂

Antekirtt

Posted at 09:06 am, 16th March 2018

Umm, no. Nuclear winter.

But I agree that while certain things can make part or even the whole of humanity collapse, very few things can truly wipe us out (AI, 20-mile asteroid too big for our nukes and detected too late,…).

There’s another problem however: if something doesn’t kill us all but throws us back to the stone age, it may be impossible to replicate the industrial revolution, because the easy stuff has already been mined (and part of the hard stuff), so you’d have a catch-22 where in order to get industrialized you need industry to reach resources now harder to access. Try googling “You only have one shot _Civilization _ mining metals”.

Taken from another angle, you’d have to go from neolithic or medieval times to nuclear/solar power in one huge jump without the fossil fuel transition (though biofuel may help a little), because humans who forgot industry are unlikely to go digging 200+ meters for magical materials they don’t even know exist and work. No medieval fracking.

Cronos

Posted at 10:37 am, 16th March 2018

Yeah, I also think there is some chance of regression. In several areas, you can argue (as BlackDragon says) that the western world is already in decline, so that’s certainly a possibility.

As BD said in his post on free universal income, it is likely that at some point in the upcoming decades, technology will make the cost of living so cheap that the government will be able to take care of everyone’s basic needs. The idea of having to work to make a living will be a thing of the past.

Two things can happen:

1. People will dedicate their time to art, literature, and other creative endeavors. Mankind will enter some kind of Golden Age.

2. Since people no longer have to work, they will just do nothing. Most people will just sit on their asses all day watching TV, playing video games, and smoking pot. This actually describes a lot of young people, so that’s very possible.

Speaking of uploading your consciousness into some kind of computer simulation, I would also be careful with that, since it means that the person controlling the simulation also controls 100% of what happens to you. What if some evil maniac decides to torture you eternally? That would be worse than death.

Caleb Jones

Posted at 10:46 am, 16th March 2018

It can if there is a catastrophic event that causes world-wide damage but doesn’t wipe out the entire human race, as I stated in the article.

True, but some argue the theory that 90-95% of mankind will regress, while, as you say, a small percentage of evolved / smart / rich people will end up ruling over the orc barbarian masses. I would still include that scenario as “regression.”

Eric C Smith

Posted at 02:47 pm, 17th March 2018

Sweet, so at this point all I have to do is keep my brain. Do tell about the aspects of our humanity we’d be losing? And as a tech human I hope that leads to space travel and all sorts of fun things.

Caleb Jones

Posted at 04:48 pm, 17th March 2018

If most of your body was made up of robotic parts, you’d probably lose some of your humanity. Double that if they surgically remove your brain and put it into a robot, or even more extreme, a glass jar where you’re in the Matrix forevermore (but know it, and choose to be there).

The question is not whether or not you’d lose some of your humanity. The question, I think, is whether or not you’d mind by that time.

Throughfare

Posted at 04:07 pm, 19th March 2018

@Antekirtt

Just so you know, the “Nuclear Winter” concept is bullshit. No professional scientists take it seriously any more:

https://www.nytimes.com/1990/01/23/science/nuclear-winter-theorists-pull-back.html?pagewanted=all